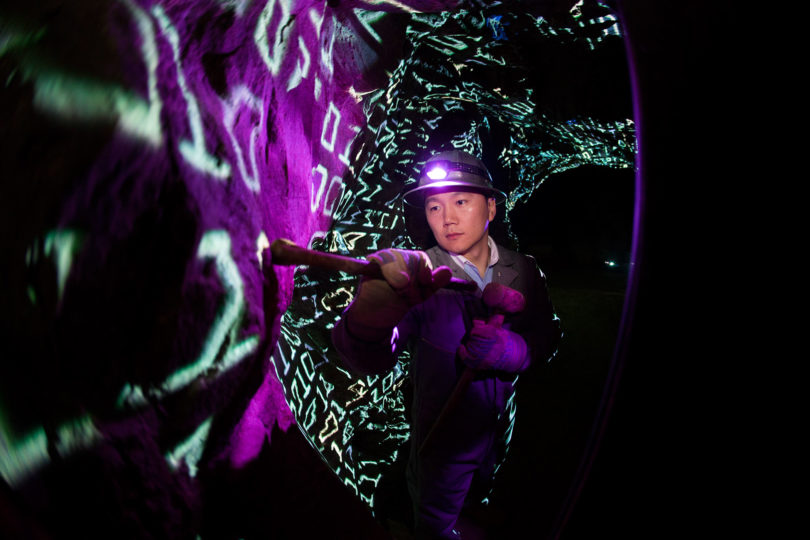

When Jaewoo Lee joined the computer science department in the Franklin College of Arts and Sciences in fall 2016 as part of the Presidential Informatics Hiring Initiative, he brought to UGA expertise in a new and growing field: data privacy.

“In 2009, when I started talking about data privacy, people would ask, ‘What is data privacy, and what is it for?’” said Lee, who arrived at UGA from a postdoctoral position at Penn State University.

Lee began working on privacy-preserving data analysis in 2009, at the beginning of his doctoral studies at Purdue University. The field is so new that it has few experts.

Data privacy is often confused with data security, which typically uses encryption to send and share data with authorized parties only. Authorized recipients are given a key, or some other means of decryption, that lets them read the message and use the data.

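“So data security is binary: the encryption is either secure or it’s broken,” Lee said. Data security protocols are designed to keep data away from an adversary. “But with data privacy, the audience or recipient is the public.” Because released information is available to anyone, including potential adversaries, privacy cannot be achieved by withholding a key; protection has to be built into what is released.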

People share personal information with the services they use, from their eating and movie-viewing habits to their health. This information is used for marketing, but also to improve services such as mapping and restaurant reviews, and to improve medical diagnoses.

Data sets containing private information, such as medical records, are regularly shared so that statistical information about them can be published. Analysts examine the data to identify patterns that benefit companies or large groups of people. The challenge is to share enough statistical information to learn as much as possible about a group without revealing information about the individuals in it.
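To see why exact group statistics can still expose individuals, consider a minimal sketch of the classic “differencing attack.” The names, records, and the helper `count_with_condition` below are hypothetical, invented only for illustration: two aggregate counts that each look harmless, subtracted from one another, reveal one person’s private value.

```python
# Hypothetical toy records: (name, has_condition). Not real data.
records = [
    ("Alice", True),
    ("Bob", False),
    ("Carol", True),
    ("Dave", False),
]

def count_with_condition(rows):
    """An innocent-looking aggregate query: how many people have the condition?"""
    return sum(1 for _, has_condition in rows if has_condition)

# Query 1: the exact count over everyone.
total = count_with_condition(records)
# Query 2: the same exact count over everyone except Carol.
without_carol = count_with_condition([r for r in records if r[0] != "Carol"])

# The difference between the two exact aggregates reveals Carol's private value.
carol_has_condition = (total - without_carol) == 1
print(total, without_carol, carol_has_condition)
```

Neither query names a single person’s record, yet together they disclose one; this is the kind of inference that privacy-preserving analysis is meant to prevent.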

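Differential privacy is a mathematically rigorous framework for learning about a group while protecting the information of the individuals in it. Informally, an analysis is differentially private if its output is almost equally likely whether or not any single person’s data is included, so the result reveals very little about any one individual.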
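The best-known building block of differential privacy is the Laplace mechanism: compute a query’s exact answer, then add random noise calibrated to the query’s sensitivity (how much one person can change the answer) and to a privacy parameter ε. The Python sketch below applies it to a simple count. The data, function names, and ε value are hypothetical, and the floating-point noise sampling is a textbook illustration, not hardened for production use.

```python
import random

def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw Laplace(0, scale) noise as the difference of two exponentials."""
    # If X, Y ~ Exponential(rate = 1/scale) independently, then X - Y ~ Laplace(0, scale).
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def private_count(values, predicate, epsilon: float, rng: random.Random) -> float:
    """Release a count with epsilon-differential privacy via the Laplace mechanism.

    Adding or removing one person changes a count by at most 1 (sensitivity 1),
    so Laplace noise with scale 1/epsilon suffices.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1.0 / epsilon, rng)

# Hypothetical toy data: ages of survey respondents.
ages = [23, 35, 41, 52, 29, 67, 44, 38]
rng = random.Random(0)
noisy = private_count(ages, lambda a: a >= 40, epsilon=0.5, rng=rng)
print(f"noisy count of respondents aged 40+: {noisy:.2f}")
```

Smaller values of ε add more noise and give stronger privacy; larger values give more accurate answers and weaker protection.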

“So the information, when released, may potentially benefit many people,” Lee said. “But information is also stored now in many places at once, so the disclosure of the information could go beyond the expected recipients. Privacy-preserving data analysis provides control over what can be inferred from the information that is released.”

Lee said that privacy was less of a concern as recently as 10 years ago, but with the development of deep learning (machine learning methods that model high-level abstractions in data) and the ubiquity of big data, people have begun to recognize its importance.

“Data privacy has extended its scope to all the fields that involve data, from computer science theorists to biologists and genomicists. Machine learning conferences have their own sessions for data privacy papers,” Lee said.

To train tomorrow’s analysts, Lee is designing and teaching a new graduate course on data privacy, placing UGA among the few universities that offer instruction in the subject.

“Deep learning and neural networks are very popular and regarded as very promising areas, but the privacy issues have not been fully handled as yet,” Lee said.

Graduates in this area will know advanced data analysis techniques (neural networks, support vector machines, and hidden Markov models) and will be able to implement them in computer programs.

His research focuses on developing algorithms that train neural networks while protecting the privacy of the individuals in the training data. One promising application of such private learning algorithms is medical image analysis. Analyzing medical imaging data is essential not only to medical research but also to diagnosing disease; however, concerns about the subjects’ privacy are the main obstacle to such analysis.
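A widely used recipe for training models under differential privacy is differentially private stochastic gradient descent (DP-SGD): clip each training example’s gradient so that no single record can move the model too far, then add Gaussian noise to the summed gradients before each update. The article does not say which algorithms Lee’s group uses, so the sketch below is a generic illustration of DP-SGD, not his method. To stay short and dependency-free, it trains a logistic-regression model (a one-layer network) rather than a deep network; the toy data, function names, and hyperparameters are hypothetical. It also omits the privacy accounting that converts the noise level into a concrete (ε, δ) guarantee, and it uses shuffled mini-batches instead of the Poisson subsampling that standard analyses assume.

```python
import math
import random

def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def clip(vec, max_norm):
    """Rescale a gradient vector so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(x * x for x in vec))
    factor = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [x * factor for x in vec]

def dp_sgd_logistic(data, epochs, lr, batch_size, clip_norm, noise_multiplier, seed=0):
    """Train logistic regression with DP-SGD-style clipped, noisy updates.

    data: list of (features, label) pairs with label in {0, 1}.
    No privacy accounting is done here; the (epsilon, delta) guarantee would
    have to be computed separately from noise_multiplier, the sampling
    scheme, and the number of steps.
    """
    rng = random.Random(seed)
    data = list(data)  # copy so shuffling does not mutate the caller's list
    dim = len(data[0][0])
    w = [0.0] * dim
    for _ in range(epochs):
        rng.shuffle(data)
        for start in range(0, len(data), batch_size):
            batch = data[start:start + batch_size]
            summed = [0.0] * dim
            for x, y in batch:
                p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)))
                # Per-example log-loss gradient, clipped to bound one record's influence.
                g = clip([(p - y) * xi for xi in x], clip_norm)
                summed = [s + gi for s, gi in zip(summed, g)]
            # Gaussian noise scaled to the clipping bound masks any single example.
            noisy = [s + rng.gauss(0.0, noise_multiplier * clip_norm) for s in summed]
            w = [wi - lr * ni / len(batch) for wi, ni in zip(w, noisy)]
    return w

# Hypothetical toy data: one feature plus a constant bias term; label is 1 when x > 0.
train = []
for i in range(200):
    x = -2.0 + 4.0 * (i + 0.5) / 200
    train.append(([x, 1.0], 1 if x > 0 else 0))

weights = dp_sgd_logistic(train, epochs=20, lr=0.5, batch_size=50,
                          clip_norm=1.0, noise_multiplier=1.0)
correct = sum(1 for x, y in train
              if (sum(w * xi for w, xi in zip(weights, x)) > 0) == (y == 1))
print(f"training accuracy: {correct / len(train):.2f}")
```

Raising `noise_multiplier` strengthens the privacy guarantee but makes each update noisier, which is the accuracy cost the next paragraph describes.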

Lee is currently working on a project with a multidisciplinary team that includes faculty from UGA’s statistics and health communication departments.

“The problem is, how much accuracy can you get? The popularity of the neural network is based on its high accuracy, with a capacity far beyond that of existing machine learning algorithms,” Lee said. “But to handle the privacy issue, we need to sacrifice some accuracy. So the question is, how much do we need to sacrifice?”
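For the simplest differentially private mechanism, the trade-off Lee describes can be written down exactly. The Laplace mechanism answers a query $f$ with sensitivity $\Delta f$ by adding noise drawn from a Laplace distribution with scale $\Delta f/\varepsilon$, where a smaller privacy parameter $\varepsilon$ means stronger privacy. The following is a worked illustration for releasing a simple average, not a result from Lee’s neural-network research:

$$
M(D) = f(D) + Z, \qquad Z \sim \mathrm{Lap}\!\left(\frac{\Delta f}{\varepsilon}\right), \qquad
\mathbb{E}\,\lvert M(D) - f(D)\rvert = \frac{\Delta f}{\varepsilon}, \qquad
\operatorname{Var}[M(D)] = \frac{2(\Delta f)^2}{\varepsilon^2}.
$$

For the mean of $n$ values that each lie in $[0, 1]$, changing one record moves the mean by at most $1/n$, so $\Delta f = 1/n$ and the expected error is $1/(n\varepsilon)$. Halving $\varepsilon$ doubles the expected error, while a data set ten times larger cuts it by a factor of ten.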